A Discussion About Eye Tracking and the Potential to Advance Readability Research

Readability Matters recently Zoomed with Alexandra Papoutsaki, an Assistant Professor in the Department of Computer Science at Pomona College. We learned more about Alexandra’s research, her development of webcam-based eye tracking, and how it can advance readability research.

Tell us more about your research. What is the potential long-term impact?

My background is in Computer Science, and I work in the area of Human-Computer Interaction. My research focuses on the methodology of eye tracking, seeking ways to both develop eye tracking technology and make use of it.

Eye tracking is a broad term that refers to processes that allow us to monitor a person’s eye activity. Knowing where someone looks and decoding their eye movements can tell us a lot about their attention and focus. Many domains are interested in eye activity: cognitive science, psycholinguistics, education, marketing, and usability studies are just a few examples. But despite the many potential insights we can gain from eye tracking, it is still not widely used. One of the main reasons is that eye tracking is primarily achieved with dedicated devices and software that cost tens of thousands of dollars. Not every research lab or company can afford them, and those that can typically keep them confined to lab spaces where experts run experiments on a small number of participants.

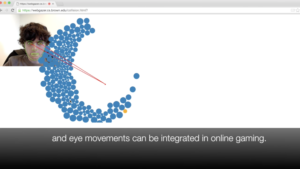

For a while now, I have been working toward democratizing eye tracking and making it more widely used and accessible. As part of my Ph.D. at Brown University, I worked with other researchers to develop eye tracking technology that uses webcams. Webcams have the advantage of being widely accessible; virtually all modern laptops have integrated webcams, and even desktop computer users are likely to own one. By combining the webcam video feed with computer vision techniques that detect the face and eyes of a person sitting in front of the webcam, we developed WebGazer. WebGazer is an eye tracking JavaScript library that can be added to any website, and upon user consent, it starts tracking their eye movements within that page. Such a solution sacrifices some accuracy compared to traditional eye tracking devices, but certain advantages make it fit for many applications. It is free and open-source, and there is no need for any special hardware or software. Anyone who wants to research eye movements, regardless of their domain, can set up a website, recruit participants remotely, and have them take part in far more realistic settings than could be achieved in a lab. There are endless possibilities, readability being one that I have started exploring, and there is so much room for improvement.

Beyond my work on webcam eye tracking, I have become interested in applications of eye tracking technology. Specifically, I have been looking at how we can use eye tracking in collaborative settings. In the era of COVID-19, we are all aware of the importance of remote collaboration. But even before that, we had strong evidence that the modern workplace was already shifting and that more people were working and collaborating remotely. As has become abundantly clear to many of us over the past year, remote collaborations pose many challenges when it comes to being aware of what our collaborators are looking at, doing, or talking about.

In the past, eye tracking has been used to study how remote collaborators look at shared artifacts (e.g., a shared online document) and to compare their gaze patterns. My research has been focusing on technology that takes this idea one step further. We are studying shared gaze, a visualization of the collaborator’s gaze activity on one’s screen, similar to seeing the collaborator’s cursor in a Google Document. My lab has been building different tools that allow collaborators to work together while being aware of each other’s point of gaze. We have been studying how shared gaze affects the quality of collaboration in settings such as remote collaborative writing and game solving, and we have seen promising results on how such technology can be leveraged to improve remote collaborations.

When did you learn about the Readability Research Community?

Working in eye tracking, I have been tangentially aware of readability work in certain populations, for example, those with dyslexia or those for whom English is a second language. I started hearing about readability for the general population through my connection with Shaun Wallace, who, while doing his Ph.D. at Brown University, has also been researching readability in collaboration with Zoya Bylinskii at Adobe Research. Over the past months, I have been lucky to join a vibrant interdisciplinary community of researchers who bring their different expertise to understanding the topic further and conducting new research around readability.

How do you see readability research connected to your work?

I see it connected to both parts of my research agenda. At an individual level, it would be fantastic to be able to scale up eye tracking experiments where we can track the eye movements of readers. This has been done before, but only on a small scale and in lab settings; webcam eye tracking technology could allow us to expand experiments to a much broader part of the population. The fact that we can now deploy eye tracking experiments in the browser allows us to easily tweak the parameters of what we study and test different hypotheses. I can also see my work in collaborative settings being relevant to readability. For example, we are currently studying remote collaborative writing. I can envision scenarios where we can study reading comprehension among team members and combine it with behavioral insights.

What was most surprising to you?

I have come to appreciate how prevalent and relevant work on readability is. This shouldn’t come as a surprise, given that I spend most of my day staring at a screen or a book, but I am still amazed by the breadth and depth of the questions that one can ask. Being part of such an interdisciplinary community and seeing how seemingly siloed fields can come together to advance knowledge has been extremely rewarding.

Is there a question we should have asked you but did not?

I have not touched on the ethical implications of my work, but there are numerous such considerations when developing technology. For example, there are privacy concerns when it comes to having access to one’s webcam video feed, even if they have consented. There are ethical questions when it comes to the (in)ability of face tracking technology to successfully detect people from certain racial backgrounds. And there are concerns about what happens when we can track one’s eye movements. For example, if we were to adopt eye tracking in the workplace or classrooms, would we end up policing people’s attention and focus?

ABOUT DR. ALEXANDRA PAPOUTSAKI: Alexandra Papoutsaki is an Assistant Professor in the Department of Computer Science at Pomona College. Her research in Human-Computer Interaction includes topics in remote collaboration, eye tracking, crowdsourcing, and health informatics. Her work has been supported by the National Science Foundation, published in top-tier peer-reviewed conferences and journals, and has been featured in publications such as Fortune Magazine, PCWorld, and FastCompany. Alexandra earned her Ph.D. and master’s degrees in Computer Science from Brown University and her bachelor’s degree in Computer Science from the Athens University of Economics and Business in Greece.

ABOUT WebGazer: WebGazer.js is an eye tracking library that uses common webcams to infer the eye-gaze locations of web visitors on a page in real time. WebGazer is based on research originally done at Brown University, with recent work at Pomona College. Learn more here.
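
For readers curious what using such a library on a page might look like, here is a minimal sketch in TypeScript. It relies on WebGazer's documented `setGazeListener(...).begin()` pattern, in which the listener receives a prediction with `x` and `y` viewport coordinates (or `null` when no prediction is available). Everything else is an assumption made for illustration: the `WebGazerLike` interface is hand-written rather than official typings, and `Region`, `collectGaze`, and `countGazeByRegion` are hypothetical helpers that tally how many gaze predictions land inside rectangular areas such as paragraphs of text.

```typescript
// Hand-written shape of the small part of WebGazer used here (an assumption,
// not official typings).
interface GazePoint { x: number; y: number; }
interface WebGazerLike {
  setGazeListener(
    cb: (data: GazePoint | null, elapsedMs: number) => void
  ): WebGazerLike;
  begin(): unknown;
}

// Hypothetical region of interest, e.g. one paragraph's bounding box in
// viewport pixels. Left/top edges are inclusive, right/bottom exclusive.
interface Region {
  name: string;
  left: number;
  top: number;
  right: number;
  bottom: number;
}

// Start tracking and append every non-null prediction to the returned array.
function collectGaze(wg: WebGazerLike): GazePoint[] {
  const points: GazePoint[] = [];
  wg.setGazeListener((data, _elapsedMs) => {
    if (!data) return; // no prediction for this frame
    points.push({ x: data.x, y: data.y });
  }).begin();
  return points;
}

// Count how many gaze predictions fall inside each region.
function countGazeByRegion(
  points: GazePoint[],
  regions: Region[]
): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const r of regions) counts[r.name] = 0;
  for (const p of points) {
    for (const r of regions) {
      const inside =
        p.x >= r.left && p.x < r.right && p.y >= r.top && p.y < r.bottom;
      if (inside) counts[r.name] += 1;
    }
  }
  return counts;
}
```

On a page that has loaded the WebGazer script and obtained the visitor's consent, one would pass the global `webgazer` object to `collectGaze` and later call `countGazeByRegion` with the bounding boxes of the text blocks under study. Raw counts like these are only a rough proxy for attention, given the accuracy trade-offs of webcam-based tracking discussed in the interview.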